Bitsletter #8: Enhancing Data Science Workflows & Lightweight Web Development

🧠 ML Tip: Optimize experiment velocity

What should it be if you had only one thing to improve in your Data Science/Machine Learning projects?

Experimentation velocity ⚡️. It's one of the most rewarding factors in your final solution’s performance.

Experimentation velocity is your capacity to do experiments quickly while efficiently managing their configurations and outcomes. Indeed, projects are time-limited, and the challenge is to get the best solution before the deadline. You won't likely nail the perfect model and preprocessing in your first attempt. So you need to try many ideas efficiently.

Experiment velocity has three critical components you can improve on.

Infrastructure: your infrastructure needs to be on point, especially working in a team. Fast and efficient access to data (e.g., via cloud buckets), data warehousing to easily extract what's needed (e.g., BigQuey, Spark, ...), ...

Modeling: machine learning reached maturity in various tasks with numerous readily available models. They even have public pre-trained weights in some cases. There are tools with pre-made configurations for multiple tasks that bring together models, pre-trained weights, and preprocessing. They will save you tons of time and significantly impact the experimentation velocity. For instance, Lightning Flash is a high-level deep learning framework. It handles many tasks, with models and weights loading, fine-tuning, ...

Experiments management: You shouldn't overlook experiments' reproducibility and tracking. What's the point of doing many experiments if you can't compare them and restore their configuration to get the best model? You should be able to reproduce an experiment quickly, even if you ran it weeks ago. Leverage tools like DVC, MLflow, ... to track your experiments and consistently maintain reproducibility. Data Science and Machine Learning are trial/error in essence. Your solution's performance depends on how many ideas you can try while maintaining a rigorous history of the outcomes. 🚀 Improve your experimentation velocity and see significant progress in your future projects. 🚀

🌐 Web Tip: Less is more, set bundle size budgets

Less is definitely more for web applications. But less what, to get more of …?

Less bundle weight for better loading times, better user experience, and more users, including those with the poorest connections.

We covered this point in previous episodes: reducing the quantity of JavaScript and assets you ship to the user drastically improves the web vital metrics (Time To Load, Time To Interactive, Largest Content Paint, ...). And better web vital metrics mean better SEO since Google now considers it when indexing your website.

You can use tools like Bundlephobia to proactively check the bundle size of third-party dependencies before including them in your project. Do you really need this 1 MB library in your web application? Is it worth the price of slowing down your website?

It can quickly get out of control as your project evolves. But there is a solution: bundle size budgets in your CI pipelines. In addition to your tests and linting, you can assert that your application bundle size is below a given threshold. By doing so, you ensure to keep your application size in control and maintain a consistent user experience. Less is more, and we should keep it in mind when developing web applications.

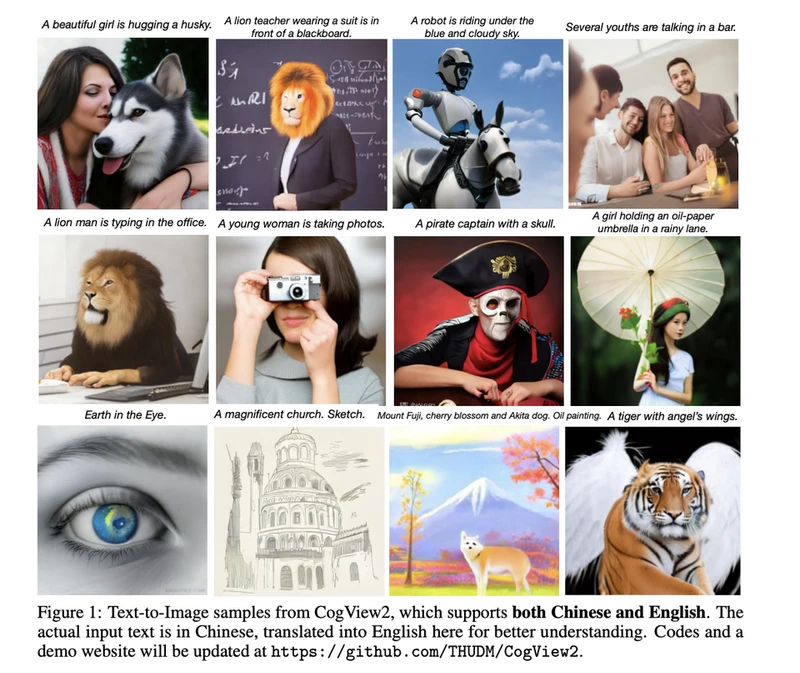

👩🔬 Research Paper: CogView2, Faster and Better Text-to-Image Generation via Hierarchical Transformers

OpenAI DALL-E 2, Google Imagen, ... autoregressive models shine in text-to-image generation. However, they raise a new problem: generation speed.

🎆 Generative Adversarial Networks (GANs) dominated generation tasks for a while. Unfortunately, these models are notoriously hard to train: mode collapse, instability, high parameters sensitivity, ... However, they achieve great results, even in high resolution, and generate images quickly. Lately, autoregressive generative models have gotten significant attention.

Models like OpenAI Dall-E 2 or Google Imagen achieved astonishing results in text-to-image generation. 💡 They use diffusion, a technique where the models map the prior distribution (noise) iteratively to the desired distribution (images). They are autoregressive since the generation happens in a transformation sequence: at each timestep, the model uses the previous results to produce the current output. It does so until it completes the generation. It has some compelling advantages: training stability, better generation results, ...

But compared with GANs, the generation takes many steps which considerably increase the generation time. 🚀 CogView2 is an autoregressive generation model for text-to-image tasks.

They introduced techniques to circumvent the issues, like hierarchical transformers and parallelism (which is harder to leverage for autoregressive models because of their dependency on previous timesteps).

Autoregressive models showed excellent capabilities and deserve more research. CogView2 introduced techniques to alleviate the slow generation problem, which was a barrier to their improvement.

🛠 Tool: i18n

Internationalization can be mandatory depending on the web applications that you build. Also, it helps reach as many users as possible, especially in countries where your primary language is poorly understood.

However, it can be a pain to implement. Fortunately, there is i18n to save your day! Working with JSON files containing your translations, you can integrate i18n with any frontend framework as a complete internationalization solution:

- Detect the user language

- Load the translations

- Optionally cache the translations

- Extension, by using post-processing

Don't fear internationalization again 😉.

📰 News

Blender 3.2 Release: Unleash your creativity

Blender is one of the best open-source tools for 3D modeling and rendering. The team released version 3.2 with many features:

- Light groups: a new render pass that contains only a subset of light sources

- Shadow caustics: render shadow caustics for a stunning and realistic touch

- Volume motion blur: Gas simulation and imported OpenVBD volumes now support motion blur

- ... and many more

GitHub expands charts for all plans

GitHub expands the insights features to all plans.

You can use Charts to track cycle velocity, current work status and access complex visualizations like Cumulative Flow Diagrams. Additionally, you get access to time-based charts helpful for visualizing trends.